Read previous part: iOS 6 Kernel Security 2 - Data Leaking Mitigations and Kernel ASLR

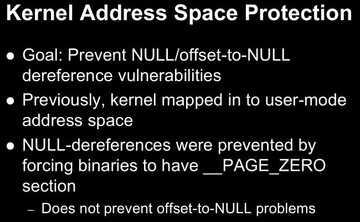

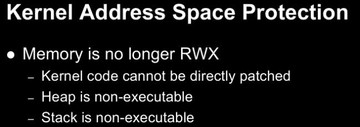

Moving along with the presentation, Mark Dowd and Tarjei Mandt give an overview of kernel address space protection as a technique for mitigating vulnerabilities in iOS 6.

NULL dereferences were actually already prevented in iOS, because binaries were forced to have this ‘__PAGEZERO’ segment, which essentially mandated that at least the first page is not mapped into memory. And they actually enforced this: if you tried to create a binary, even a signed binary, without that ‘__PAGEZERO’, the system would just kill it because there was an integrity check. However, I guess they felt that wasn’t enough, because there are potential offset-to-NULL problems: if, for example, a ‘realloc’ failed and someone didn’t notice, they might write to an offset from NULL that’s high enough that something is mapped there, in which case maybe you could do something.
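To make the offset-to-NULL case concrete, here is a minimal Python sketch of the arithmetic. The 4 KB size of the unmapped region and the helper name `write_faults` are assumptions for illustration; the talk only says that at least the first page is unmapped.

```python
PAGE_SIZE = 0x1000  # assumed 4 KB page; the talk only says "at least the first page"

def write_faults(base, offset, unmapped_limit=PAGE_SIZE):
    """Return True if a write to base + offset lands in the unmapped low region."""
    return (base + offset) < unmapped_limit

NULL = 0

# A small offset from a NULL pointer hits the unmapped page and faults.
small = write_faults(NULL, 0x10)

# A large offset skips past the guard region, so the write may land
# on whatever happens to be mapped at that address.
large = write_faults(NULL, 0x20000)
```

In this model only the small offset is caught, which is why a guard page alone does not cover a failed ‘realloc’ followed by a write at a large index.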

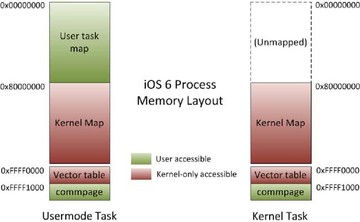

So, now the ‘kernel_task’ has its own address space while it’s executing; there’s no user task visible. They basically transition into the kernel task’s address space in the interrupt handlers, or when you issue an SVC or whatever. And they had to do some special operations to handle the copying to and from user memory in kernel mode, so that they can see both address spaces at the same time. They actually do that by using two translation table base registers for user-mode, where the first one points to the user address space and the second one points to the kernel address space. And when the kernel is running they just replace TTBR0 with just the kernel address space, so it can’t actually see user-mode anymore. The end result is that user-mode pages are essentially not accessible while executing in kernel mode, so you are not going to run into a situation where you accidentally access them.

This is basically how that looks. You can see the user task has both the user address space and the kernel map. Again, there’s a static commpage that’s actually visible to both the user and the kernel, and when the kernel is running there’s nothing in the lower part of memory.
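The two-register layout described above can be modelled in a few lines of Python. This is a toy sketch, not the real MMU: the `Mmu` class, the page addresses, and the idea of representing each half of the address space as a set of mapped pages are all assumptions for illustration, and the shared commpage is left out. The split at 0x80000000 is taken from the kernel base mentioned later in the talk.

```python
SPLIT = 0x80000000  # assumed user/kernel boundary (the talk's 0x80000000)

class Mmu:
    """Toy two-register MMU: low addresses use ttbr0, high addresses use ttbr1."""

    def __init__(self, ttbr0, ttbr1):
        self.ttbr0 = ttbr0  # set of mapped page bases in the low half
        self.ttbr1 = ttbr1  # set of mapped page bases in the high half

    def is_mapped(self, addr):
        table = self.ttbr0 if addr < SPLIT else self.ttbr1
        return (addr & ~0xFFF) in table

user_pages = {0x00010000}    # hypothetical user mapping
kernel_pages = {0x80001000}  # hypothetical kernel mapping

# While a user task runs, both halves are populated.
user_mode = Mmu(ttbr0=user_pages, ttbr1=kernel_pages)

# On kernel entry the low-half register is replaced; the empty set stands
# for the kernel's own table, which has nothing in the lower part of memory.
kernel_mode = Mmu(ttbr0=set(), ttbr1=kernel_pages)
```

With this model, `kernel_mode.is_mapped(0x00010234)` is false even though the same address is mapped in `user_mode`, which is the property the mitigation is after.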

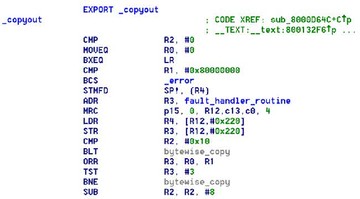

So, when I was looking into these I looked back at iOS 5, and they had this crazy ‘copyin()’ and ‘copyout()’ strategy, where the only validation they did for a user-mode pointer was that the pointer was below kernel memory, that is, it’s in user-mode. But they didn’t check the length at all, and that was crazy because the pointer plus length could be larger than 0x80000000, and then they’d run the risk of inadvertently copying into or out of kernel memory while performing a copy. But basically there was a limitation to the attack, which is that devices had to have more than 512MB RAM, because I tried mapping the top page in user-mode and it didn’t work. I looked into it and eventually discovered that was why. But new devices such as iPad 3 and iPhone 5 that have 1GB RAM will let you map that top of memory in, and then you can do this ‘copyin()’ / ‘copyout()’ trick.

Here’s the code that does that. You can see that basically there’s no length validation at all. As long as the user-mode pointer starts in user-mode, then apparently that’s fine.
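As a rough model of that iOS 5 behaviour, the following Python sketch checks only the start pointer and then computes how much of the copy would spill over the boundary. The function names are hypothetical and this is not the kernel's code, just the arithmetic described in the talk.

```python
KERNEL_BASE = 0x80000000  # boundary quoted in the talk

def ios5_style_check(user_ptr, length):
    """Model of the described check: only the start pointer is tested."""
    # The length argument is deliberately ignored, mirroring the flaw.
    return user_ptr < KERNEL_BASE

def bytes_past_boundary(user_ptr, length):
    """Bytes of [user_ptr, user_ptr + length) at or above KERNEL_BASE."""
    end = user_ptr + length
    return max(0, end - max(user_ptr, KERNEL_BASE))
```

For example, a pointer of 0x7FFFF000 with a length of 0x2000 passes `ios5_style_check`, yet `bytes_past_boundary` reports 0x1000 bytes on the kernel side of the boundary.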

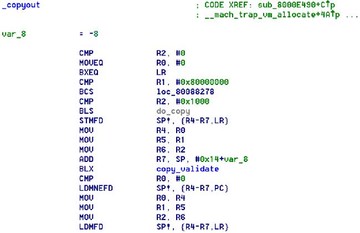

So, iOS 6 actually added a whole bunch of checks. They added integer overflow and signedness checks, a conservative maximum length, and a check that everything is in user-space. However, they are actually still vulnerable to the same bug, because if the copy length passed to ‘copyin()’ or ‘copyout()’ is less than 4K, then the pointer plus length check is just skipped for some reason, which means you can read and write to the first page of kernel memory for anything that does a ‘copyin()’ or ‘copyout()’.

So, here’s the code. Again, you can see that there’s a function called ‘copy_validate’ – that’s the thing that actually does everything, but there’s a check right before it, where, if the length is less than 0x1000, then don’t worry about it, it’s probably fine.
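The shape of that logic can be sketched in Python as follows. Only the 0x1000 threshold and the name ‘copy_validate’ come from the talk; the value of `MAX_COPY`, the wrapper name `ios6_style_check`, and the assumption that the start pointer is still checked for small copies are illustrative guesses. Python integers do not overflow, so the overflow and signedness checks are not modelled.

```python
KERNEL_BASE = 0x80000000
SMALL_COPY = 0x1000      # threshold named in the talk
MAX_COPY = 0x08000000    # hypothetical "conservative maximum length"

def copy_validate(user_ptr, length):
    """Stand-in for the full validation: size cap plus end-of-range check."""
    if length > MAX_COPY:
        return False
    return user_ptr + length <= KERNEL_BASE

def ios6_style_check(user_ptr, length):
    # Assumed: the start pointer must still lie in user space.
    if user_ptr >= KERNEL_BASE:
        return False
    # The shortcut described in the talk: small copies skip the range check.
    if length < SMALL_COPY:
        return True
    return copy_validate(user_ptr, length)
```

Under these assumptions a copy of 0x800 bytes starting at 0x7FFFFFF0 is accepted by `ios6_style_check` even though `copy_validate` alone would reject it, while a 0x2000-byte copy from 0x7FFFF000 is correctly refused.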

So, is there anything in that first page of memory? Well, as we know, initially the kmap offset random allocation used for randomizing the kmap is there, but that’s removed after the first garbage collection. That necessarily means that the first page of memory won’t be in any zones, because the zones were allocated while the kmap offset allocation was still in place. So you need to find things that allocate from the kernel map directly, and there are a couple of such things: HFS does it a bunch of times, and actually ‘kalloc()’ does it for blocks larger than 256 KB, which are allocated directly from the kernel map. So it is indeed possible to map the first page of kernel memory in.

So, in order to attack a bug like this, basically, I created a proof-of-concept: I created a pipe and specified a buffer to either ‘copyin()’ or ‘copyout()’ that was in the last page of user-mode memory, and then we would be able to cross over that boundary and either read some data out of kernel memory, resulting in a useful information leak, or write into the first page of kernel memory, causing a panic or gaining privileges. One thing that was particularly interesting is that, because ‘copyin()’ and ‘copyout()’ are designed to handle faults all the time, if your allocation strategy fails and the memory isn’t mapped like you’d hoped, the kernel is not going to panic; it will just safely return EFAULT. So you can actually try endlessly without causing any damage, and then you can read back the kernel memory, check that the allocation there is the thing that you wanted to be there, and then perform your overwrite. So, that was quite interesting.
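The fault-tolerant behaviour is easy to see in a small simulation. The sketch below is a pure Python model with hypothetical names (`fault_tolerant_copy`, `attempts_until_mapped`) and made-up page layouts; it touches no real memory and only shows why a failed attempt costs nothing more than an EFAULT return value.

```python
import errno

PAGE = 0x1000

def fault_tolerant_copy(mapped_pages, addr, length):
    """Return 0 on success, or errno.EFAULT if any touched page is unmapped.

    Assumes length > 0. The function never raises, mirroring a copy
    routine that reports faults instead of panicking.
    """
    first = addr & ~(PAGE - 1)
    last = (addr + length - 1) & ~(PAGE - 1)
    for page in range(first, last + PAGE, PAGE):
        if page not in mapped_pages:
            return errno.EFAULT
    return 0

def attempts_until_mapped(layouts, addr, length):
    """Try the same copy against successive layouts; return the first index that succeeds."""
    for i, mapped in enumerate(layouts):
        if fault_tolerant_copy(mapped, addr, length) == 0:
            return i
    return None

# Hypothetical layouts: the first kernel page only becomes mapped on the third try.
layouts = [
    {0x7FFFF000},
    {0x7FFFF000},
    {0x7FFFF000, 0x80000000},
]
```

Here `attempts_until_mapped(layouts, 0x7FFFFFF0, 0x20)` walks past two harmless EFAULT results before the copy spanning the boundary succeeds.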

Read next part: iOS 6 Kernel Security 4 - Attack Strategies